Episode Transcript

Transcripts are displayed as originally observed. Some content, including advertisements, may have changed.


0:02

Hey everyone, this is Tristan. We have a special episode for you today with computer scientist Oren Etzioni to talk about a new tool to detect AI-generated content. As AI technology gets better, the ability to tell reality from unreality will only grow. Deepfakes are already being used to scam people, extort them, and influence elections, and there are lots of sites out there that claim to be able to detect whether a piece of content was created using AI. But if you've used these sites, you know that they're unreliable, to say the least. There are also folks out there who are working to build better tools, people who understand the science of artificial intelligence and want to see a future where we can actually know what's real on the internet.

0:46

And one of those folks is Oren Etzioni, the founding CEO of the Allen Institute for Artificial Intelligence. His nonprofit, TrueMedia.org, has just launched its state-of-the-art AI detection tool. And I'll just say that here at the Center for Humane Technology, we think it's critical not just to point out problems but to highlight the important work that's being done to help address them. So I'm super excited to have Oren on the show today to talk about that work. Oren, welcome to Your Undivided Attention.

1:10

Thank you, Tristan. It's a real pleasure to be here, and as I'll explain in a minute, it's particularly meaningful to have this conversation with you personally.

1:19

Well, let's get right into that, because I believe you and I actually first met at the meeting in July 2023 with President Biden about artificial intelligence. Could you tell us that story?

1:30

With pleasure. I suddenly got an email, and it was an invitation to join President Biden, Governor Newsom, and some key members of his staff in a small meeting in San Francisco. The idea was for a few of us to get together and share our thoughts and ideas about AI with him, to give him a sense of what is most important. And I'm probably one of the more optimistic AI folks that you would have whether

12:00

I can see the hands or not, I can squint and glance and I can tell. So we launched a quiz using only social media items, political deepfakes that have been posted on social media, and we found that people typically cannot tell. The New York Times did multiple quizzes, a very recent one with videos and a previous one with faces. When you take these quizzes, you are quickly humbled. You cannot tell. So the fact of the matter is, even in the current state of the technology, and as you pointed out, Tristan, it keeps getting better, people are deluding themselves if they think they can tell.

12:34

Yeah, and I think it's just so important for people to remember this. I remember the days when I would see deepfakes, and it would cause alarm when you saw where it was going, but you would always say, "But at the end of the day, I can still tell that this was generated by a computer." And I think in many areas of AI, whether it's AI capabilities in biology and chemistry and math and science or generating fake media, we look at the capabilities today and we say, "Oh, but see, it fails here, here, and here," and then we say, "So see, there's nothing really to be worried about." But if you look at the speed at which this is going, we don't want to have guardrails after the capabilities are so scaled up. We want to really get those guardrails in now. So I'm so grateful that you've been doing this work.

13:13

I thought for a moment what we could do is just set the table a little bit, because there's a whole ecosystem of players in this space and there are different terms people throw around: watermarking media, the provenance of media, disclosure, direct disclosure, indirect disclosure versus detection of things. Could you just give us a little lay of the land of the different approaches in this space?

13:34

I think President Biden's executive order called for watermarking of media. So all these terms like provenance and watermarking and others refer to technologies that attempt to stamp, to track the origin of a media item (I'll just use an image for simplicity), to track changes to it, and to give you that information upfront.

13:59

That's...

20:00

You can create a deepfake 100% of the time. You cannot accurately detect a deepfake 100% of the time. And getting it to 100% takes years and years and years of research that now you, Oren, have signed up for with your organization and nonprofit, having to do all of this work to get closer and closer and closer. So wherever there are these asymmetries, we should be investing in the defensive first rather than just proliferating all these new offensive AI capabilities into society.

20:25

So, Oren, I think what we really want to do in this podcast is paint a picture of how we get to the ideal future. You're working hard on building one tool, but as you said, it's not a silver bullet; it's just one tool in a whole ecosystem of solutions. Do you have an integrated idea of the suite, the ecosystem of things that you would love to see if we treated this problem as kind of the Y2K for reality breaking down?

20:54

I think ecosystem is exactly the right phrase here, because I think that we all have a role to play. I think regulators have a role, as we just saw in California, and hopefully this will become federal, or many states will follow California. And then when you have a patchwork of regulations at the state level, sometimes it's elevated to federal and even international regulation. So I think that's an important component. It needs to be done right, right? There's a balance here between the burden on the companies, protecting free speech rights, and at the same time creating an appropriate world for us, particularly in these extreme-harm cases like politics, non-consensual pornography, et cetera. So I think that's incredibly important.

21:37

Once you have those, you need the tools to then detect that something's fake, whether it's watermarking, post hoc detection like we do at TrueMedia.org, or a combination of both. You can't have regulations on the books without enforcement. So I think regulation and enforcement go hand in hand. I really hope that in that context,

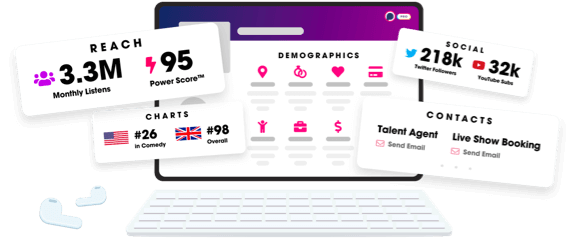

Unlock more with Podchaser Pro

- Audience Insights

- Contact Information

- Demographics

- Charts

- Sponsor History

- and More!

- Account

- Register

- Log In

- Find Friends

- Resources

- Help Center

- Blog

- API

Podchaser is the ultimate destination for podcast data, search, and discovery. Learn More

- © 2024 Podchaser, Inc.

- Privacy Policy

- Terms of Service

- Contact Us